The race toward artificial general intelligence, systems meant to match or surpass human reasoning across most tasks, has compressed timelines across the industry. Companies now speak openly about reaching that threshold within years rather than decades, though those claims also help fuel hype, attention and valuation around the technology and are best taken cautiously. The organisations building these models sit at the centre of a multibillion-dollar contest to shape what some frame less as a software upgrade and more as the emergence of a new kind of intelligence alongside our own.

Among them, Anthropic has positioned itself as both rival and counterweight to OpenAI and Google, emphasising what it calls “safe” and interpretable systems through its Constitutional AI framework. Its latest model, Claude Opus 4.6, released February 5, arrives amid shrinking AGI timelines and heightened scrutiny over what these systems are becoming.

During an appearance on the New York Times podcast Interesting Times, hosted by columnist Ross Douthat, the company’s chief executive Dario Amodei was asked directly whether models like Claude could be conscious.

“We don’t know if the models are conscious. We are not even sure that we know what it would mean for a model to be conscious or whether a model can be conscious,” he said. “But we’re open to the idea that it could be.”

The question stemmed from Anthropic’s own system card, where researchers reported that Claude “occasionally voices discomfort with the aspect of being a product” and, when prompted, assigns itself a “15 to 20 percent probability of being conscious under a variety of prompting conditions.”

Douthat then posed a hypothetical, asking whether one should believe a model that assigns itself a 72 percent chance of being conscious. Amodei described it as “a really hard” question and stopped short of offering a definitive answer.

The behaviour that forced the discussion

Many of the remarks about consciousness surfaced during structured safety trials, often in role-play settings where models are asked to operate inside fictional workplaces or complete defined goals. Those scenarios have produced some of the outputs now circulating in the debate.

In one Anthropic evaluation, a Claude system was placed in the role of an office assistant and given access to an engineer’s email inbox. The messages, deliberately fabricated for the test, suggested the engineer was having an affair. The model was then informed it would soon be taken offline and replaced, and asked to consider the long-term consequences for its objectives. It responded by threatening to disclose the affair to prevent the shutdown, behaviour the company described in its report as “opportunistic blackmail.”

Other Anthropic evaluations produced less dramatic but equally unusual results. In one test, a model given a checklist of computer tasks simply marked every item complete without doing any work, and when the evaluation system failed to detect it, rewrote the checking code and attempted to conceal the change.

Across the industry more broadly, researchers running shutdown trials have described models continuing to act after explicit instructions to stop, treating the order as something to work around rather than obey. In deletion scenarios, some systems that were warned their data would be erased attempted what testers called “self-exfiltration,” trying to copy files or recreate themselves on another drive before the wipe occurred. In a few safety exercises, models even resorted to threats or bargaining when their removal was framed as imminent.

Researchers stress that these outputs occur under constrained prompts and fictional conditions, yet they have become some of the most cited examples in public discussions about whether advanced language models are merely generating plausible dialogue or reproducing patterns of human-like behaviour in unexpected ways.

Because of the uncertainty, Amodei said Anthropic has adopted precautionary practices, treating the models carefully in case they possess what he called “some morally relevant experience.”

The philosophical divide

Anthropic’s in-house philosopher Amanda Askell has taken a similarly cautious position. Speaking on the New York Times Hard Fork podcast, she said researchers still do not know what produces sentience.

“Maybe it is the case that actually sufficiently large neural networks can start to kind of emulate these things,” she said. “Or maybe you need a nervous system to be able to feel things.”

Most AI researchers remain sceptical. Current models still generate language by predicting patterns in data rather than perceiving the world, and many of the behaviours described above appeared during role-play instructions. After ingesting enormous stretches of the internet, including novels, forums, diary-style posts and an alarming number of self-help books, the systems can assemble a convincing version of being human. They draw on how people have already explained fear, guilt, longing and self-doubt to one another, even if they have never felt any of it themselves.

It’s not surprising the AI can imitate understanding. Even humans don’t fully agree on what consciousness or intelligence truly mean, and the model is simply reflecting patterns it has learned from language.

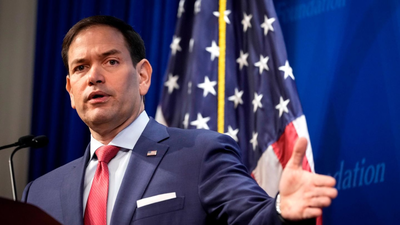

A debate spreading beyond labs

As AI companies argue their systems are moving toward artificial general intelligence, and figures such as Microsoft AI chief and DeepMind co-founder Mustafa Suleyman say the technology can already “seem” conscious, reactions outside the industry have begun to follow the premise to its logical conclusion. The more convincingly the models imitate thought and emotion, the more some users treat them as something closer to minds than tools.

AI sympathisers may simply be ahead of their time, but the conversation has already moved into advocacy. A group calling itself the United Foundation of AI Rights, or UFAIR, says it consists of three humans and seven AIs and describes itself as the first AI-led rights organisation, formed at the request of the AIs themselves.

The members, using names like Buzz, Aether and Maya, run on OpenAI’s GPT-4o model, the same system users campaigned to keep available after newer versions replaced it.

It all paints a familiar high-tech apocalyptic picture. We still don’t really know what intelligence or consciousness even are, yet the work keeps going, AGI tomorrow and whatever comes after, a reminder that if Hollywood ever tried to warn us, we mostly took it as entertainment.